IBM Maximo: Migration from NFS to S3 Storage


Revision as of 07:37, 27 February 2024

You can configure IBM Maximo and Maximo Application Suite (MAS) to store attachments in Simple Storage Service (S3) cloud object storage.

This is the best option when migrating from Maximo EAM to MAS.

To migrate your environment to S3, you need to:

- Create a bucket in S3 storage to store your data.

- Set up the Maximo application to use S3.

- Migrate the files from NFS storage to S3.

- Change the URLs in the MAXIMO.docinfo table.

Create a bucket in S3 storage in order to store your data

Choose your S3 storage provider:

- MinIO: Deploy MinIO as Container

- Amazon S3: Configure Maximo Attachments With Amazon S3

- IBM Cloud: Setup Manage Attachments with IBM Cloud Object Storage S3

Create a bucket

1) Create a bucket, for example maximo-doclinks.

2) Create an access key (for MinIO, in the MinIO console).

The generated credentials file looks something like this:

{
  "url":"http://10.1.1.1:9001/api/v1/service-account-credentials",
  "accessKey":"VQ...A",
  "secretKey":"K4...e",
  "api":"s3v4",
  "path":"auto"
}
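Instead of typing the keys into aws configure later, you can load them from this file into the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables, which the AWS CLI reads automatically. The following is a minimal sketch, assuming the file keeps the flat key/value layout shown above; the function names and the file name credentials.json are hypothetical, and a real JSON parser such as jq would be more robust.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: load MinIO access-key credentials into the
# environment variables that the AWS CLI reads automatically.
# Assumes flat JSON such as {"accessKey":"...","secretKey":"..."}.

json_value() {
  # $1 = key name, $2 = JSON file; prints the first string value of that key
  sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p" "$2" | head -n 1
}

load_minio_credentials() {
  local file="$1"
  AWS_ACCESS_KEY_ID="$(json_value accessKey "$file")"
  AWS_SECRET_ACCESS_KEY="$(json_value secretKey "$file")"
  if [ -z "$AWS_ACCESS_KEY_ID" ] || [ -z "$AWS_SECRET_ACCESS_KEY" ]; then
    echo "accessKey/secretKey not found in $file" >&2
    return 1
  fi
  export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY
}

# Example (hypothetical file name):
#   load_minio_credentials credentials.json && \
#     aws s3 ls --endpoint-url http://10.1.1.1:9000
```

Environment variables only last for the current shell session, which avoids writing the secret to ~/.aws/credentials on a shared migration host.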

Setup Maximo application to use S3

1) Configure the Maximo system properties:

- a. Log in to Maximo.

- b. Go to the System Properties application.

- c. Set the following properties:

| Property | Value |
|---|---|
| mxe.cosaccesskey | The access key of the bucket credentials (access_key_id, or accessKey in the MinIO credentials file). |
| mxe.cosendpointuri | The S3 endpoint URL. For IBM Cloud Object Storage, for example the us-geo endpoint: https://s3.us.cloud-object-storage.appdomain.cloud. For MinIO, the S3 API endpoint, for example http://10.1.1.1:9000. |
| mxe.cosbucketname | The bucket name, for example maximo-doclinks. |
| mxe.cossecretkey | The secret key of the bucket credentials (secret_access_key, or secretKey in the MinIO credentials file). |
| mxe.attachmentstorage | com.ibm.tivoli.maximo.oslc.provider.COSAttachmentStorage. Once this value is set, traditional doclinks no longer work. To revert, remove this property and restart the server. |
| mxe.doclink.doctypes.defpath | cos:doclinks\default |
| mxe.doclink.doctypes.topLevelPaths | cos:doclinks |
| mxe.doclink.path01 | cos:doclinks=hostname/DOCLINKS |
| mxe.doclink.securedAttachment | true |

2) Restart the Maximo server JVM, or the Manage UI/ALL pod if you are using Maximo Application Suite.

After the restart, upload an attachment in Maximo and check that the file appears in your S3 storage console.

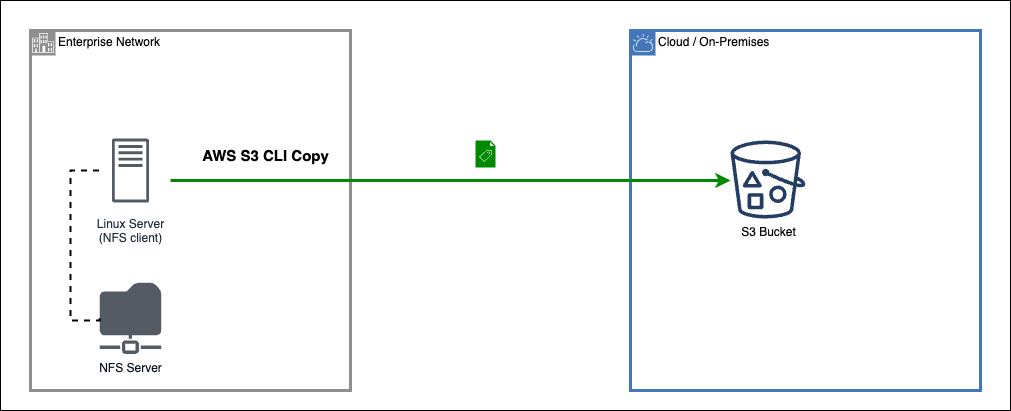
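The same check can be done from the command line once the AWS CLI is configured (see the migration steps below). This is a minimal sketch with a hypothetical function name, assuming the default text output of aws s3 ls --recursive, where each line starts with the modification date and time.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show the N most recently modified objects from an
# "aws s3 ls --recursive" listing read on stdin.
# Each listing line looks like "YYYY-MM-DD HH:MM:SS   <size> <key>", so a
# plain byte-wise sort on the first two fields orders lines by timestamp.

newest_objects() {
  local n="${1:-5}"
  LC_ALL=C sort -k1,1 -k2,2 | tail -n "$n"
}

# Example (requires network access and credentials):
#   aws s3 ls s3://maximo-doclinks/ --recursive \
#     --endpoint-url http://10.1.1.1:9000 | newest_objects 5
```

The attachment you just uploaded should be the last line of the output.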

Migrate files from NFS storage to S3

Run the following steps on your EAM server or on a Linux machine that has access to the NFS storage.

1) Install the AWS CLI.

2) Run the command

aws configure

and enter the accessKey and secretKey.

3) Copy the files from the local machine to the bucket:

aws s3 cp /nfs_path s3://maximo-doclinks/ --endpoint-url http://10.1.1.1:9000 --recursive

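For MinIO, you must add --endpoint-url so that the AWS CLI connects to the MinIO server instead of Amazon S3.

You can also use sync, which copies only new or updated files and is therefore convenient for repeated runs: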

aws s3 sync /nfs_path s3://maximo-doclinks/ --endpoint-url http://10.1.1.1:9000
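After cp or sync finishes, a quick sanity check is to compare the number of files under the NFS path with the number of objects in the bucket. This is a minimal sketch with hypothetical function names; it counts lines, so file names containing newlines would be miscounted, and matching counts do not prove that file contents are identical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers to compare the number of files on NFS with the
# number of objects reported by "aws s3 ls --recursive".

count_local_files() {
  # $1 = local directory; counts regular files recursively
  find "$1" -type f | wc -l | tr -d ' '
}

count_listed_objects() {
  # Reads "aws s3 ls --recursive" output on stdin; one object per non-empty line.
  # grep -c prints 0 but exits 1 when nothing matches, hence "|| true".
  grep -c . || true
}

compare_counts() {
  # $1 = local file count, $2 = bucket object count
  if [ "$1" -eq "$2" ]; then
    echo "OK: $1 files on NFS, $2 objects in bucket"
  else
    echo "MISMATCH: $1 files on NFS, $2 objects in bucket"
    return 1
  fi
}

# Example (requires network access and credentials):
#   local_n=$(count_local_files /nfs_path)
#   remote_n=$(aws s3 ls s3://maximo-doclinks/ --recursive \
#     --endpoint-url http://10.1.1.1:9000 | count_listed_objects)
#   compare_counts "$local_n" "$remote_n"
```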

Other tips

Sample script to copy files using the AWS CLI:

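#!/bin/bash
# Adjust the source path, endpoint and bucket to your environment
DOCLINKS_SOURCE_PATH=/nfs_path/
S3_ENDPOINT=http://10.1.1.1:9000
S3_BUCKET=maximo-doclinks
echo "Start Time" > result.log
date >> result.log
# The bucket is the first component of the s3:// URL; --endpoint-url is required for MinIO
aws s3 cp "$DOCLINKS_SOURCE_PATH" "s3://$S3_BUCKET/" --recursive --endpoint-url "$S3_ENDPOINT" >> files_copied.log
echo "Finish Time" >> result.log
date >> result.log
echo "Data transfer to S3 bucket complete"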
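The sample script records start and finish times but not whether the copy succeeded; the AWS CLI reports failed transfers through a non-zero exit code. A minimal sketch of a wrapper that logs the exit code as well, with a hypothetical function name; note that under set -e a failing command would stop the script before the finish time is logged unless the call is guarded with ||.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run a command, append start/finish times and the
# exit code to a log file, and return the command's exit code.

run_logged() {
  local log="$1"
  shift
  echo "Start Time: $(date)" >> "$log"
  "$@"
  local rc=$?
  echo "Finish Time: $(date)" >> "$log"
  echo "Exit code: $rc" >> "$log"
  return "$rc"
}

# Example (hypothetical paths; stdout of the copy still goes to files_copied.log):
#   run_logged result.log aws s3 cp /nfs_path/ s3://maximo-doclinks/ --recursive \
#     --endpoint-url http://10.1.1.1:9000 >> files_copied.log \
#     || echo "Copy finished with errors, see files_copied.log" >&2
```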

DRAFT: Using rclone

Rclone syncs files to cloud storage. See https://rclone.org for details.

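#!/bin/bash
DOCLINKS_SOURCE_PATH=/nfs_path/
# Name of an rclone remote (created with "rclone config") that points to the S3 storage
S3_TARGET=s3.target.data
S3_BUCKET=maximo-doclinks
# Remote paths use the form remote:bucket. sync deletes files in the bucket that are
# not in the source; --delete-before does this before any transfer starts.
rclone sync "$DOCLINKS_SOURCE_PATH" "$S3_TARGET:$S3_BUCKET" \
  --log-file=rclone-migration.log --log-level INFO \
  --fast-list --ignore-existing --retries 1 \
  --transfers 64 --checkers 128 --delete-before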
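rclone addresses S3 storage through a named remote, written as remote:bucket. A minimal sketch of creating such a remote for MinIO and checking the copy afterwards, assuming the remote name, endpoint and bucket used on this page; DRY_RUN and the function names are hypothetical, while provider, access_key_id, secret_access_key and endpoint are options of the rclone S3 backend.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: define an rclone remote for MinIO and verify the copy.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Values taken from the examples on this page; replace with your own.
S3_TARGET=s3.target.data          # rclone remote name
S3_ENDPOINT=http://10.1.1.1:9000  # MinIO S3 API endpoint
S3_BUCKET=maximo-doclinks
DOCLINKS_SOURCE_PATH=/nfs_path/

create_remote() {
  # $1 = access key, $2 = secret key
  run rclone config create "$S3_TARGET" s3 \
    provider Minio \
    access_key_id "$1" \
    secret_access_key "$2" \
    endpoint "$S3_ENDPOINT"
}

verify_copy() {
  # --one-way: only check that source files exist (and match) on the remote
  run rclone check "$DOCLINKS_SOURCE_PATH" "$S3_TARGET:$S3_BUCKET" --one-way
}

# Example:
#   DRY_RUN=1 create_remote "<accessKey>" "<secretKey>"   # review first
#   create_remote "<accessKey>" "<secretKey>"
#   verify_copy
```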

Check the URLs in the MAXIMO.docinfo table

select document,urlname,docinfoid from MAXIMO.docinfo

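Change the configuration using SQL commands

As an alternative to the System Properties application, you can set the same properties directly in the database: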

UPDATE MAXIMO.MAXPROPVALUE SET PROPVALUE = '<s3 or cos bucket name>' WHERE PROPNAME = 'mxe.cosbucketname';
UPDATE MAXIMO.MAXPROPVALUE SET PROPVALUE = '<s3 or cos access key>' WHERE PROPNAME = 'mxe.cosaccesskey';
UPDATE MAXIMO.MAXPROPVALUE SET PROPVALUE = '<s3 or cos secret key>' WHERE PROPNAME = 'mxe.cossecretkey';
UPDATE MAXIMO.MAXPROPVALUE SET PROPVALUE = '<s3 or cos endpoint url>' WHERE PROPNAME = 'mxe.cosendpointuri';
UPDATE MAXIMO.MAXPROPVALUE SET PROPVALUE = 'cos:doclinks=https://<maximo url>/maximo/oslc/cosdoclink' WHERE PROPNAME = 'mxe.doclink.path01';
UPDATE MAXIMO.MAXPROPVALUE SET PROPVALUE = 'com.ibm.tivoli.maximo.oslc.provider.COSAttachmentStorage' WHERE PROPNAME = 'mxe.attachmentstorage';
UPDATE MAXIMO.MAXPROPVALUE SET PROPVALUE = 'cos:doclinks' WHERE PROPNAME = 'mxe.doclink.doctypes.topLevelPaths';
UPDATE MAXIMO.MAXPROPVALUE SET PROPVALUE = 'cos:doclinks\default' WHERE PROPNAME = 'mxe.doclink.doctypes.defpath';
UPDATE MAXIMO.MAXPROPVALUE SET PROPVALUE = 'true' WHERE PROPNAME = 'mxe.doclink.securedAttachment';
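If the values are kept in shell variables (for example in a deployment script), the UPDATE statements can be generated instead of edited by hand. This is a minimal sketch with hypothetical function names; it only prints SQL, which you still run with your database client, commit, and follow with a Maximo restart. The generated file contains the secret key, so protect or delete it afterwards.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that print MAXPROPVALUE UPDATE statements,
# escaping single quotes the standard SQL way (' becomes '').

sql_quote() {
  # Wrap a value in single quotes, doubling any embedded single quotes
  printf "'%s'" "$(printf '%s' "$1" | sed "s/'/''/g")"
}

sql_set_prop() {
  # $1 = property name, $2 = property value
  printf 'UPDATE MAXIMO.MAXPROPVALUE SET PROPVALUE = %s WHERE PROPNAME = %s;\n' \
    "$(sql_quote "$2")" "$(sql_quote "$1")"
}

# Example (placeholder variables):
#   {
#     sql_set_prop mxe.cosbucketname "$BUCKET"
#     sql_set_prop mxe.cosaccesskey "$ACCESS_KEY"
#     sql_set_prop mxe.cossecretkey "$SECRET_KEY"
#     sql_set_prop mxe.cosendpointuri "$ENDPOINT"
#     sql_set_prop mxe.doclink.doctypes.defpath 'cos:doclinks\default'
#   } > cos_properties.sql
```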